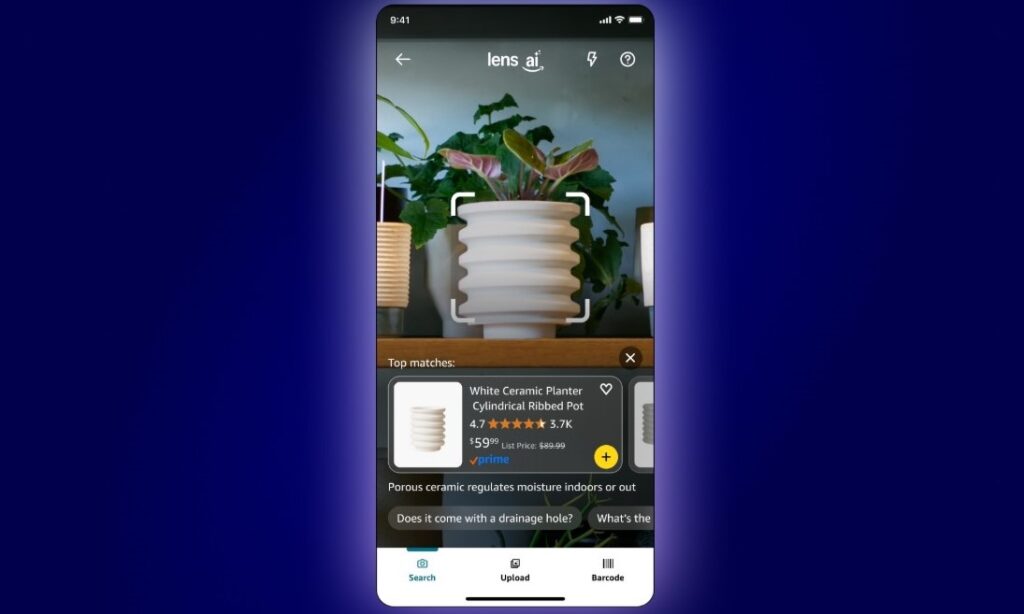

Amazon has recently introduced Lens Live, a visual search feature that aims to transform product discovery within its shopping app. This innovation allows users to find and purchase products in real time through sophisticated image recognition technology, marking a significant advancement in the integration of artificial intelligence (AI) within online shopping.

Lens Live builds upon Amazon’s existing Lens offering, which already enabled customers to conduct visual searches by taking pictures or scanning barcodes. The new functionality enhances this experience by allowing users to point their smartphone cameras at an object and instantly receive a dynamic display of potential matches from Amazon’s extensive catalog. This is achieved through a scrolling carousel that presents various options without requiring users to navigate away from the camera view. Additionally, items can be easily added to the shopping cart or wish list while browsing.

The inclusion of Rufus, Amazon’s generative AI shopping assistant, further amplifies the utility of Lens Live. As users interact with the camera view, they are presented with product summaries and suggested questions to assist in comparing options and conducting research. Rufus, which is now widely available to U.S. customers, illustrates how AI can serve as a valuable companion in the shopping process, streamlining the decision-making phase for consumers.

Underpinning this advanced functionality is technology built on Amazon Web Services’ SageMaker and OpenSearch platforms. The use of on-device computer vision plays a crucial role by allowing for immediate identification of objects before querying Amazon’s inventory. This design choice contributes to low-latency results and improves the accuracy of product matches, fostering a more efficient shopping experience.
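Amazon has not published the details of how Lens Live matches objects to catalog items, so the following is only a minimal sketch of one common pattern for visual search. It assumes that the on-device step yields an embedding vector for the detected object and that the catalog lookup is a nearest-neighbor search over product embeddings. The catalog entries, vector values, and function names are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_matches(query_vec, catalog, k=3):
    """Return the k catalog ids whose embeddings are most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), pid) for pid, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(pid, round(score, 3)) for score, pid in scored[:k]]


# Toy 3-dimensional embeddings; real image embeddings have hundreds of dimensions.
CATALOG = {
    "mug-blue": [0.9, 0.1, 0.0],
    "mug-red": [0.8, 0.3, 0.1],
    "lamp-desk": [0.0, 0.2, 0.9],
}

# Hypothetical embedding produced on the device for the object in the camera view.
query = [0.85, 0.15, 0.05]
print(top_matches(query, CATALOG, k=2))
```

In this sketch the heavy step (turning pixels into a vector) happens before any network call, and the server only has to rank vectors, which is one plausible reason such a split would keep latency low.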

Lens Live has rolled out to "tens of millions" of iOS app users in the United States, and Amazon plans to extend it to all U.S. shoppers in the near future. After a sluggish start to 2025, Amazon reported a surge in growth during the second quarter, capturing 8.8% of the U.S. consumer retail market. This rebound reflects both the continuing evolution of online shopping and the broader trend of using AI and automation to enhance consumer engagement.

The introduction of Lens Live coincides with a competitive push among major retailers to incorporate generative AI into their shopping experiences. Companies recognize the need to improve product discovery and shorten the route from browsing to checkout. Small and medium-sized businesses (SMBs) can draw practical lessons from this trend.

SMBs looking to leverage automation could benefit from implementing a visual search feature akin to Amazon’s Lens Live. One practical avenue is through platforms such as Zapier or Make, which facilitate the integration of various applications and automate workflows. For instance, consider utilizing Zapier to connect your eCommerce platform with a visual recognition tool. Through this setup, when a customer uploads an image of a desired product, the image can be processed through an AI-powered recognition API to identify matching products in your inventory.
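Both Zapier and Make can start a workflow from an incoming webhook, so one way to wire up the trigger is for your storefront to post a small JSON event whenever a customer uploads an image. The sketch below shows what building such an event might look like. The field names, event name, and customer id are hypothetical, and the image is base64-encoded only because JSON cannot carry raw bytes.

```python
import base64
import hashlib
import json
import mimetypes


def build_upload_event(image_bytes, filename, customer_id):
    """Package an uploaded image as a JSON-serializable webhook event."""
    content_type, _ = mimetypes.guess_type(filename)
    return {
        "event": "image_uploaded",  # hypothetical event name
        "customer_id": customer_id,
        "filename": filename,
        "content_type": content_type or "application/octet-stream",
        # The checksum lets the receiving step detect a corrupted or truncated upload.
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
    }


# Placeholder bytes stand in for a real image file.
event = build_upload_event(b"\x89PNG fake image bytes", "sneaker.png", "cust-123")
body = json.dumps(event)  # this string would be the POST body sent to the webhook URL
print(event["content_type"], len(body))
```

For large images it may be preferable to upload the file to storage first and send only its URL in the event, since webhook endpoints commonly limit payload size.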

Here’s a step-by-step approach to implementing similar automation:

- Identify a Visual Recognition Tool: Research available AI tools dedicated to image recognition that can integrate with your current eCommerce system. Popular choices include Google Cloud Vision or Microsoft Azure’s Computer Vision API.
- Set Up an Automation Platform: Create an account with a workflow automation platform such as Zapier or Make. Familiarize yourself with its features and how it communicates with your chosen visual recognition tool.
- Create a Trigger Event: In your automation platform, establish a trigger event based on the criteria you set. For example, when a user uploads an image, it acts as the trigger to initiate the recognition process.
- Process the Image: Connect the visual recognition API to process the uploaded image. As part of this step, configure the necessary API calls that will capture and analyze the image data.
- Match Products: After recognition, use the API response to retrieve matching products from your inventory database. Ensure you have the data structure that can accommodate the results returned by the visual recognition tool.
- Display Results: Set up an interface that will show the results to the user within your eCommerce environment, providing options to view and purchase matched items directly.
- Iterate and Optimize: Continuously monitor the performance of this automated process. Collect customer feedback to enhance the user experience, making adjustments based on usage patterns and preferences.
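The process, match, and display steps above can be sketched in a few lines. This is an illustration under stated assumptions, not a production design: `fake_recognizer` is a hypothetical stand-in for a real vision API call, the inventory is a hard-coded list rather than a database, and matching is a simple overlap between recognized labels and product tags.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    title: str
    tags: frozenset


# Hypothetical inventory; a real store would query its product database instead.
INVENTORY = [
    Product("SKU-1", "Blue ceramic mug", frozenset({"mug", "ceramic", "blue"})),
    Product("SKU-2", "Steel travel mug", frozenset({"mug", "steel", "travel"})),
    Product("SKU-3", "Desk lamp", frozenset({"lamp", "desk"})),
]


def fake_recognizer(image_bytes):
    """Stand-in for a vision API call; returns (label, confidence) pairs."""
    return [("Mug", 0.97), ("Ceramic", 0.88), ("Tableware", 0.40)]


def match_products(labels, inventory, min_confidence=0.5):
    """Rank products by how many confident labels appear in their tags."""
    confident = {label.lower() for label, score in labels if score >= min_confidence}
    ranked = []
    for product in inventory:
        overlap = len(confident & product.tags)
        if overlap:
            ranked.append((overlap, product))
    ranked.sort(key=lambda pair: pair[0], reverse=True)
    return [product for _, product in ranked]


def handle_upload(image_bytes, recognizer=fake_recognizer, inventory=INVENTORY):
    """Process the image, match products, and return display-ready results."""
    labels = recognizer(image_bytes)
    matches = match_products(labels, inventory)
    return [{"sku": p.sku, "title": p.title} for p in matches]


print(handle_upload(b"raw image bytes"))
```

Passing the recognizer in as a parameter keeps the matching logic testable without network access and makes it easier to swap vision providers later. The `min_confidence` threshold is one of the first values worth tuning during the iterate-and-optimize step.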

Implementing such automation can yield several advantages, including improved user experience, increased sales conversion, and a competitive edge in the marketplace. The risks deserve equal attention: customer-uploaded images passed to a third-party recognition service raise data privacy concerns, and the integration needs consistent updates as the connected systems change. The return on investment (ROI) can be significant, as streamlined workflows often lead to enhanced operational efficiency and customer satisfaction.

In conclusion, as eCommerce continues to evolve through AI and automation, SMBs should actively seek ways to engage with these technologies to improve their operations. Taking steps to automate product discovery via visual search can lead to a more interactive and efficient shopping experience for customers, ultimately driving growth.

FlowMind AI Insight: Embracing advancements like AI-driven visual search not only enhances customer engagement but also positions SMBs to thrive in an increasingly competitive retail landscape. By implementing automation thoughtfully, businesses can unlock new opportunities for growth and innovation.


2025-09-10 01:41:00