Designing, deploying, and monitoring an AI-powered automation system in a small or mid-size business requires a clear understanding of how operational technology (OT) connects to information technology (IT). This step-by-step guide walks operations managers without a development background through the process, so that you can use AI to improve efficiency and support data-driven decisions.

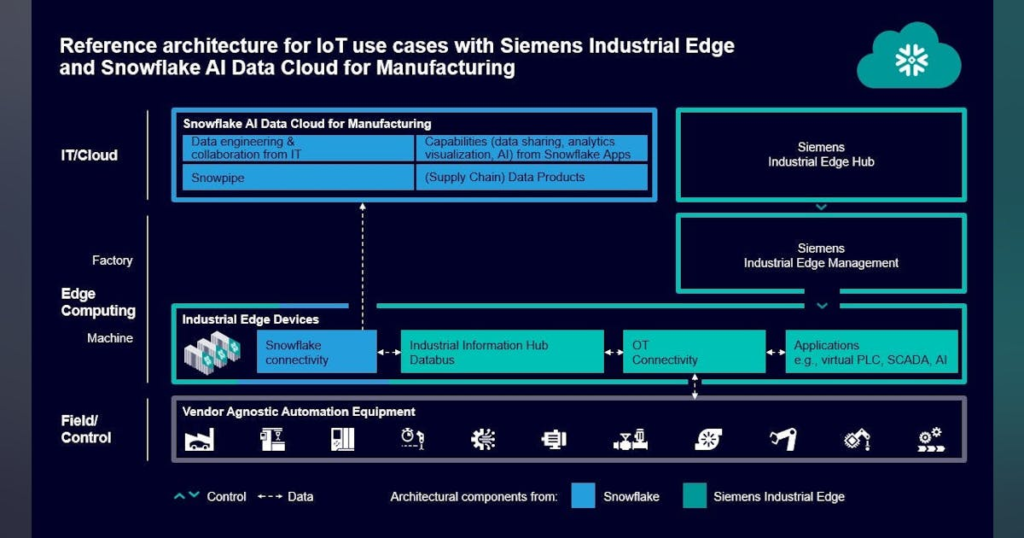

Start with the prerequisites. Before building the automation framework, make sure the necessary hardware is in place: sensors, programmable logic controllers (PLCs), and production machinery that can connect to a data network. You also need a robust industrial edge device, for example one running Siemens Industrial Edge, to collect and pre-process data at the production level. Finally, familiarize yourself with a cloud data platform such as Snowflake, which this guide relies on for analytics and long-term data storage.

Configuration comes next. Connect your industrial edge device to your production equipment: install the sensors on the machines and link them to the edge device over a communication protocol the equipment supports, such as OPC UA or Modbus TCP. Then run a test to confirm that sensor data is being captured accurately. For example, fit a temperature sensor to a manufacturing line to monitor operating conditions continuously; the expected outcome is real-time temperature readings logged accurately on the edge device.
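The connection test can be partly automated. The Python sketch below assumes the temperature readings have already been pulled from the edge device into a list; the `Reading` type, the one-second sampling interval, and the plausible range of -20 °C to 150 °C are illustrative assumptions, not defaults of Siemens Industrial Edge or of any sensor. It reports gaps, out-of-order timestamps, and implausible values; an empty list means the capture looks healthy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reading:
    """One temperature sample (hypothetical shape for illustration)."""
    timestamp: datetime
    celsius: float


def verify_capture(readings, expected_interval_s=1.0, min_c=-20.0, max_c=150.0):
    """Return a list of human-readable problems; an empty list means the test passed."""
    if not readings:
        return ["no readings captured"]
    problems = []
    # Consecutive samples should arrive in order and roughly on schedule.
    for prev, cur in zip(readings, readings[1:]):
        gap = (cur.timestamp - prev.timestamp).total_seconds()
        if gap <= 0:
            problems.append(f"out-of-order timestamp at {cur.timestamp.isoformat()}")
        elif gap > 2 * expected_interval_s:
            problems.append(f"{gap:.0f}s gap before {cur.timestamp.isoformat()}")
    # Values outside the assumed physical range usually point to wiring or scaling errors.
    for r in readings:
        if not min_c <= r.celsius <= max_c:
            problems.append(f"implausible {r.celsius} °C at {r.timestamp.isoformat()}")
    return problems


start = datetime(2025, 1, 1, 8, 0, 0)
good = [Reading(start + timedelta(seconds=i), 21.5 + 0.1 * i) for i in range(5)]
bad = [
    Reading(start, 21.5),
    Reading(start + timedelta(seconds=1), 21.6),
    Reading(start + timedelta(seconds=5), 999.0),  # late and implausible
]
print(verify_capture(good))
print(verify_capture(bad))
```

Running a check like this after every wiring or configuration change gives a repeatable pass/fail result instead of eyeballing the raw log.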

With the hardware in place, integrate the edge device with the Snowflake platform. Use the available plug-and-play edge applications to connect the edge device to the cloud. This usually involves configuring API endpoints and authentication credentials so that data is transferred securely. The expected outcome is a continuous, reliable flow of data from the shop floor into your Snowflake environment.
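However the connection is made, two habits help: keep credentials out of source code, and buffer data on the edge device so that a network outage does not lose readings. The Python sketch below shows both. The environment-variable names and the `send` function are hypothetical placeholders; in practice the upload would be done by the edge application or Snowflake connector you choose, which is not shown here. Batches are serialized as newline-delimited JSON, a simple and widely supported interchange format.

```python
import json
import os
from typing import Callable, Mapping

# Hypothetical variable names; use whatever your connector or edge app expects.
REQUIRED_KEYS = ("SNOWFLAKE_ACCOUNT", "SNOWFLAKE_USER", "SNOWFLAKE_PASSWORD")


def load_config(env: Mapping[str, str] = os.environ) -> dict:
    """Read connection settings from the environment instead of hard-coding them."""
    missing = [k for k in REQUIRED_KEYS if not env.get(k)]
    if missing:
        raise RuntimeError("missing settings: " + ", ".join(missing))
    return {k: env[k] for k in REQUIRED_KEYS}


class StoreAndForward:
    """Buffer records locally and forward them in batches when the link is up."""

    def __init__(self, send: Callable[[str], None], batch_size: int = 500):
        self.send = send  # hypothetical upload function supplied by the integration
        self.batch_size = batch_size
        self.pending: list = []

    def add(self, record: dict) -> None:
        self.pending.append(record)

    def flush(self) -> int:
        """Send pending records oldest first; return how many were delivered."""
        delivered = 0
        while self.pending:
            batch = self.pending[: self.batch_size]
            payload = "\n".join(json.dumps(r, sort_keys=True) for r in batch)
            try:
                self.send(payload)
            except OSError:
                break  # network error: keep the batch for the next flush
            del self.pending[: len(batch)]
            delivered += len(batch)
        return delivered
```

Calling `flush()` on a timer keeps the buffer small, and because a failed batch stays in `pending`, readings captured during an outage are sent once the link returns. A real deployment would also cap the buffer so a long outage cannot fill the device's storage.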

Once configuration is complete, test the system. Run several simulations that generate operational data, such as production counts, machine performance metrics, or defect rates, and check that the AI system analyzes this data and returns insights. A test input could be the data from a specific production run; the expected outcome is actionable insights, such as production bottlenecks or areas for improvement.
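To check that the analysis surfaces a known bottleneck, you can feed it a production run whose answer you already know. The Python sketch below stands in for that test, not for the AI system itself: it simulates per-station cycle times for a serial line with no buffers between stations (an assumption) and picks the station with the longest average cycle time, since that station caps the throughput of the whole line. Station names, mean cycle times, and the 10% variation are made up.

```python
import random
from statistics import mean


def simulate_run(station_means, n_parts=200, jitter=0.1, seed=42):
    """Generate per-station cycle times (seconds) for one simulated production run."""
    rng = random.Random(seed)
    return {
        station: [max(0.0, rng.gauss(mu, mu * jitter)) for _ in range(n_parts)]
        for station, mu in station_means.items()
    }


def find_bottleneck(cycle_times):
    """Return (station, mean cycle time in s, line throughput in parts/hour)."""
    averages = {station: mean(times) for station, times in cycle_times.items()}
    slowest = max(averages, key=averages.get)
    # In an unbuffered serial line, the slowest station sets the pace for all others.
    return slowest, averages[slowest], 3600 / averages[slowest]


run = simulate_run({"cutting": 30.0, "welding": 55.0, "painting": 40.0})
station, cycle, rate = find_bottleneck(run)
print(f"Bottleneck: {station} at {cycle:.1f} s/part, about {rate:.0f} parts/hour")
```

If the AI system's report on the same simulated run does not point at the slowest station, that is a sign the data mapping or the analysis needs attention before go-live.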

Monitoring is the next critical phase. Set up dashboards with Snowflake's analytics capabilities to visualize operational data continuously, so that you can track key performance indicators (KPIs) in real time. Check these dashboards regularly to confirm that the reported data matches what is actually happening on the production floor. For example, a daily production report as input should yield production efficiency metrics as output.
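The source does not name a specific KPI. One widely used efficiency metric that a daily production report can feed is overall equipment effectiveness (OEE): the product of availability (run time ÷ planned time), performance (ideal cycle time × parts produced ÷ run time), and quality (good parts ÷ parts produced). The Python sketch below computes it from a hypothetical `DailyReport`; the field names and figures are illustrative. On a dashboard, the same arithmetic could equally sit in a SQL view over the Snowflake tables.

```python
from dataclasses import dataclass


@dataclass
class DailyReport:
    """Illustrative fields a daily production report might provide."""
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # unplanned stops
    ideal_cycle_seconds: float  # fastest sustainable time per part
    total_count: int            # parts produced
    good_count: int             # parts that passed inspection


def oee(report: DailyReport) -> dict:
    """Compute availability, performance, quality, and their product (OEE)."""
    run_minutes = report.planned_minutes - report.downtime_minutes
    if run_minutes <= 0 or report.total_count == 0:
        return {"availability": 0.0, "performance": 0.0, "quality": 0.0, "oee": 0.0}
    availability = run_minutes / report.planned_minutes
    performance = report.ideal_cycle_seconds * report.total_count / (run_minutes * 60)
    quality = report.good_count / report.total_count
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


day = DailyReport(planned_minutes=420, downtime_minutes=47,
                  ideal_cycle_seconds=1.0, total_count=19_271, good_count=18_848)
for name, value in oee(day).items():
    print(f"{name:>12}: {value:.1%}")
```

Showing the three factors next to the combined figure tells you whether a dip comes from downtime, slow running, or scrap.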

Error handling is vital for a successful deployment. Design the system to detect anomalies or discrepancies in the reported data. For instance, if machine performance drops below a set threshold, the system should notify you immediately. Automated alerts let you respond quickly to unforeseen issues and keep downtime to a minimum.
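A threshold alert that fires on a single low reading tends to produce false alarms. The Python sketch below, an assumed design rather than a feature of any particular product, alerts only after a machine stays below the threshold for several consecutive readings, notifies once per episode, and re-arms when performance recovers. The `notify` hook is hypothetical and would be wired to e-mail, SMS, or a chat channel.

```python
from collections import deque


class ThresholdAlert:
    """Notify once when a machine stays below a threshold for N consecutive readings."""

    def __init__(self, machine, threshold, consecutive=3, notify=print):
        self.machine = machine
        self.threshold = threshold
        self.recent = deque(maxlen=consecutive)  # rolling window of "below threshold" flags
        self.notify = notify  # hypothetical hook: e-mail, SMS, chat message
        self.active = False

    def observe(self, value):
        """Record one reading; return True while the alert condition holds."""
        self.recent.append(value < self.threshold)
        breached = len(self.recent) == self.recent.maxlen and all(self.recent)
        if breached and not self.active:
            self.notify(f"{self.machine}: performance {value} below {self.threshold} "
                        f"for {self.recent.maxlen} consecutive readings")
        self.active = breached  # re-arm once a reading recovers
        return breached


alerts = []
press = ThresholdAlert("press-2", threshold=80, notify=alerts.append)
for reading in [90, 75, 70, 65, 60, 85, 70, 70, 70]:
    press.observe(reading)
print(alerts)
```

The number of consecutive readings trades speed for noise: a smaller window reacts faster but alerts on more transient dips.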

Cost control is another fundamental aspect of AI-powered automation. Initial investment costs can include hardware, cloud subscriptions, and integration services. Use the data the system collects to analyze operational efficiency and production output, then run a cost-benefit analysis that compares financial metrics before and after automation. Inputs to this analysis could include savings from reduced manual labor and higher production rates; the expected outcome is a clear picture of how overall profitability has changed.
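A simple way to compare before and after is cost per unit produced, with the ongoing automation costs spread across each month. The Python sketch below assumes monthly figures for one production line; the cost categories and all numbers are illustrative, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class MonthlyFigures:
    """Illustrative monthly cost and output figures for one production line."""
    labor: float             # wages for manual work on the line
    other: float             # materials, energy, overhead
    units: int               # units produced in the month
    automation: float = 0.0  # hardware amortization, cloud subscription, integration


def cost_per_unit(m: MonthlyFigures) -> float:
    return (m.labor + m.other + m.automation) / m.units


def compare(before: MonthlyFigures, after: MonthlyFigures) -> dict:
    b, a = cost_per_unit(before), cost_per_unit(after)
    return {"before": b, "after": a, "change_pct": (a - b) / b * 100}


result = compare(
    MonthlyFigures(labor=40_000, other=20_000, units=10_000),
    MonthlyFigures(labor=30_000, other=20_000, units=11_000, automation=5_000),
)
print(f"{result['before']:.2f} -> {result['after']:.2f} per unit "
      f"({result['change_pct']:+.1f}%)")
```

With these made-up figures, cost per unit falls from 6.00 to 5.00 even after paying 5,000 a month for automation, because labor drops and output rises. Real inputs should come from the data the system collects.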

As you develop the system, plan for security and data privacy. Implement appropriate cybersecurity measures to protect sensitive production and operational data, encrypt data both in transit and at rest, and audit user access levels regularly to limit exposure of critical information.
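Access audits are easier to repeat when the policy is written down. The Python sketch below assumes you can export each user's job function, assigned roles, and last login date from your systems; the role names, the `ALLOWED_ROLES` policy, and the 90-day idle limit are hypothetical. It flags roles a user's job does not need and accounts that have gone unused.

```python
from datetime import date

# Hypothetical policy: which roles each job function genuinely needs.
ALLOWED_ROLES = {
    "operator": {"dashboard_viewer"},
    "engineer": {"dashboard_viewer", "edge_config"},
    "admin": {"dashboard_viewer", "edge_config", "user_admin"},
}


def audit_access(users, today, max_idle_days=90):
    """Return (user, finding) pairs for excess roles and long-unused accounts."""
    findings = []
    for user in users:
        allowed = ALLOWED_ROLES.get(user["job"], set())
        excess = set(user["roles"]) - allowed
        if excess:
            findings.append((user["name"], "excess roles: " + ", ".join(sorted(excess))))
        idle_days = (today - user["last_login"]).days
        if idle_days > max_idle_days:
            findings.append((user["name"], f"no login for {idle_days} days"))
    return findings


users = [
    {"name": "ana", "job": "operator", "roles": ["dashboard_viewer"],
     "last_login": date(2025, 9, 1)},
    {"name": "ben", "job": "operator", "roles": ["dashboard_viewer", "user_admin"],
     "last_login": date(2025, 9, 10)},
    {"name": "cy", "job": "engineer", "roles": ["edge_config"],
     "last_login": date(2025, 3, 1)},
]
for name, finding in audit_access(users, today=date(2025, 9, 17)):
    print(name, "-", finding)
```

An unknown job function maps to an empty set of allowed roles, so every role it holds is flagged; that errs on the side of review rather than silent approval.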

Data retention policies are just as important for complying with relevant regulations. Define clearly how long each kind of data is stored and under what circumstances it is deleted. This helps your organization avoid unnecessary data liabilities while adhering to privacy regulations.
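Retention rules can be expressed as a period per data category and applied on a schedule. In the Python sketch below, the categories and periods are placeholders to be replaced with values that meet your actual legal and contractual obligations; records in a category with no defined policy are kept and reported rather than deleted. The deletion itself, in whatever storage system holds the data, is not shown.

```python
from datetime import datetime, timedelta

# Placeholder periods per data category; set real values from your obligations.
RETENTION = {
    "sensor_raw": timedelta(days=90),
    "production_summary": timedelta(days=365 * 7),
}


def apply_retention(records, now):
    """Split records into kept, expired, and unclassified lists.

    Records in a category without a defined policy are kept (and also listed
    as unclassified) so that nothing is deleted by accident.
    """
    kept, expired, unclassified = [], [], []
    for rec in records:
        limit = RETENTION.get(rec["category"])
        if limit is None:
            unclassified.append(rec)
            kept.append(rec)
        elif now - rec["created"] > limit:
            expired.append(rec)
        else:
            kept.append(rec)
    return kept, expired, unclassified


now = datetime(2025, 9, 17)
records = [
    {"id": 1, "category": "sensor_raw", "created": datetime(2025, 5, 1)},
    {"id": 2, "category": "sensor_raw", "created": datetime(2025, 9, 1)},
    {"id": 3, "category": "production_summary", "created": datetime(2020, 1, 1)},
    {"id": 4, "category": "camera_images", "created": datetime(2024, 1, 1)},
]
kept, expired, unclassified = apply_retention(records, now)
print("delete:", [r["id"] for r in expired])
print("needs a policy:", [r["id"] for r in unclassified])
```

Logging what was deleted and when also gives you evidence that the policy is actually enforced.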

Vendor lock-in can be a significant concern when implementing new technology. To mitigate this risk, consider using open standards and APIs for integration. This makes it easier to move data between systems and reduces dependency on a single vendor.
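One way to apply this in your own integration code is to write against a small interface, keep each destination behind it, and prefer open formats such as CSV or JSON. The Python sketch below is an illustrative pattern, not part of any vendor's API; `DataSink` and the two sink classes are hypothetical names. Adding or replacing a destination then means writing one new class instead of changing every producer.

```python
import csv
import io
import json
from typing import Protocol


class DataSink(Protocol):
    """Anything that can receive a batch of flat records."""

    def write(self, rows: list) -> None: ...


class CsvSink:
    """Write records as CSV, an open format nearly every tool can import."""

    def __init__(self, stream):
        self.stream = stream
        self.writer = None

    def write(self, rows):
        if not rows:
            return
        if self.writer is None:  # write the header only once
            self.writer = csv.DictWriter(self.stream, fieldnames=sorted(rows[0]))
            self.writer.writeheader()
        self.writer.writerows(rows)


class JsonLinesSink:
    """Write one JSON object per line."""

    def __init__(self, stream):
        self.stream = stream

    def write(self, rows):
        for row in rows:
            self.stream.write(json.dumps(row, sort_keys=True) + "\n")


def export(rows: list, sinks: "list[DataSink]") -> None:
    """Send the same records to every configured destination."""
    for sink in sinks:
        sink.write(rows)


csv_out, jsonl_out = io.StringIO(), io.StringIO()
export([{"machine": "M1", "temp_c": 21.5}], [CsvSink(csv_out), JsonLinesSink(jsonl_out)])
print(csv_out.getvalue())
print(jsonl_out.getvalue())
```

This does not remove dependence on a platform's features, but it keeps the exit path short: the data already exists in formats other systems can read.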

Estimating ROI and ongoing maintenance costs is a critical part of your automation journey. To calculate ROI, account for both tangible and intangible benefits, such as labor savings, increased production uptime, and improved product quality. Review these benefits regularly against the maintenance costs of cloud services and hardware upkeep.
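ROI and payback can be estimated with a few lines of arithmetic: ROI = (total benefit − total cost) ÷ total cost over a chosen horizon, where total cost is the initial investment plus maintenance. The Python sketch below uses invented figures and ignores discounting and taxes; intangible benefits only enter the calculation once you assign them a monetary estimate.

```python
import math


def roi(initial_cost, monthly_benefit, monthly_maintenance, months):
    """Simple (undiscounted) ROI over a horizon: (benefits - costs) / costs."""
    total_cost = initial_cost + monthly_maintenance * months
    total_benefit = monthly_benefit * months
    return (total_benefit - total_cost) / total_cost


def payback_month(initial_cost, monthly_benefit, monthly_maintenance):
    """First month in which cumulative net benefit covers the initial cost, or None."""
    net = monthly_benefit - monthly_maintenance
    if net <= 0:
        return None  # maintenance eats all the benefit; the project never pays back
    return math.ceil(initial_cost / net)


# Illustrative figures only: 60,000 up front, 6,000/month benefit, 1,000/month upkeep.
print(f"3-year ROI: {roi(60_000, 6_000, 1_000, 36):.0%}")
print(f"Payback in month {payback_month(60_000, 6_000, 1_000)}")
```

With these figures, ROI over 36 months is 125% and payback arrives in month 12. Re-running the calculation with actual maintenance costs each quarter shows whether the original estimate still holds.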

FlowMind AI Insight: Integrating AI into your operations not only streamlines production but also opens avenues for continuous improvement, strengthening your organization's ability to adapt to market changes. Adopt this technology with a clear focus on using data insights, securing your systems, and mitigating risks, and it can deliver significant operational benefits over time.

Original article: Read here

2025-09-17 11:41:00